How To Automate Data Loading From Tableau Prep Builder To Your Data Lake Or Warehouse

Our Tableau Data Prep tutorial closes the loop in your data workflows

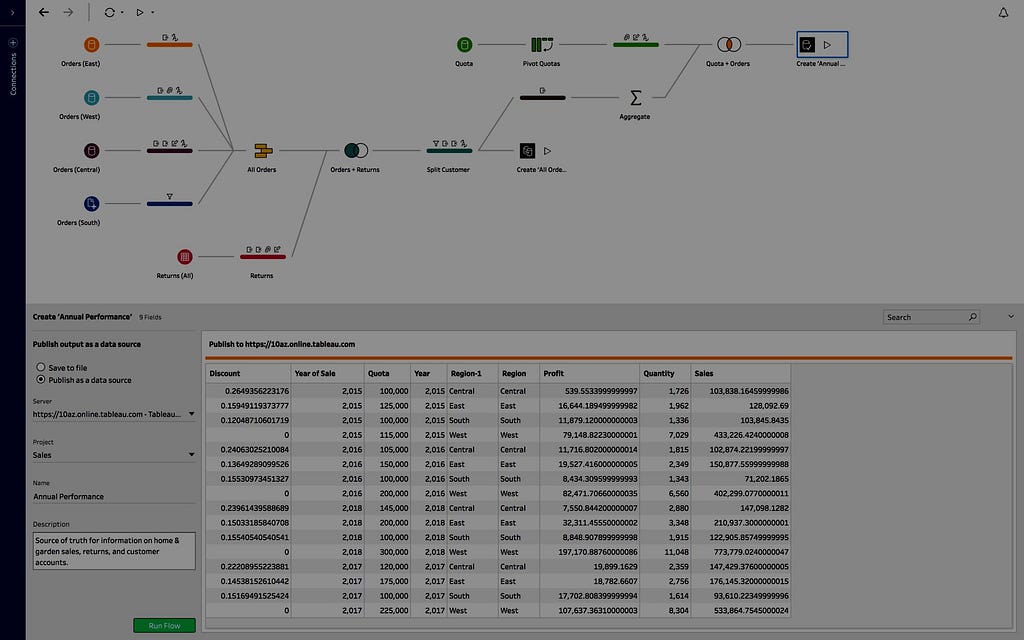

Tableau Prep Builder provides an easy-to-use interface to combine, shape, and clean “dirty data” so you can get to analysis faster in Tableau Desktop. As a result, Prep can support an ELT workflow, where data is extracted, loaded, and then transformed via Prep Builder. This makes it convenient for analysts and business users to make adjustments and refinements quickly, without traditional ETL tools.

Unfortunately, if you want the outputs of your flow written back to a data lake or warehouse, it can be a challenge. While writing flow output directly to a target database is a requested feature, it is not yet available within Prep.

Does this mean you are stuck? NO! You can still automate the outputs from Tableau Prep to a target data lake or warehouse in a few simple steps.

Adding a couple of steps to your workflow is all it takes to get its output loaded to a target data lake or warehouse.

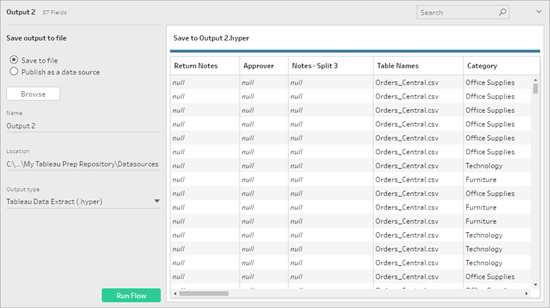

Step 1: Create an extract file

Add an output step to your flow. The Output pane opens and shows you a snapshot of the data set you will be exporting.

In the left pane, select Save to file. Click the Browse button, then in the Save Extract As dialog, enter a name for the file and click Accept.

In the Output type field, select Comma Separated Values (.csv). The exported CSV file will be encoded as UTF-8. This is the file you will load to your data lake or warehouse.
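If you want a quick sanity check on the export before moving on, a minimal Python sketch like the one below can help. It assumes the file really is UTF-8 with a header row; the function name `preview_export` and the example path are hypothetical, not part of Tableau Prep.

```python
import csv
from pathlib import Path


def preview_export(path, sample_rows=5):
    """Read a CSV export as UTF-8 and return (header, sample, row_count).

    Raises UnicodeDecodeError if the file is not valid UTF-8.
    """
    # "utf-8-sig" also accepts a file that starts with a byte-order mark.
    with Path(path).open(encoding="utf-8-sig", newline="") as handle:
        reader = csv.reader(handle)
        header = next(reader, [])
        sample = []
        row_count = 0
        for row in reader:
            row_count += 1
            if len(sample) < sample_rows:
                sample.append(row)
    return header, sample, row_count


# Example (hypothetical path):
# header, sample, row_count = preview_export("exports/orders_clean.csv")
```

Comparing the returned row count with what the Output pane showed is a cheap way to catch a truncated or stale export.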

Congratulations, you now have a processed, clean, and modeled file. Next, we suggest doing a quick QA/QC check on your file(s).

Step 2: Validating Your CSV Output

Before loading your CSV, we suggest you run a quick validation and schema check. This step checks the validity of the CSV file against the RFC 4180 CSV specification and also lets you create a schema for reference purposes.

While the CSV format is standard, it can present difficulties. Why? Different tools and export processes often generate outputs that are not really CSV files, or that have variations not considered “valid” according to RFC 4180.
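To make the idea of a “valid” file concrete, here is a rough local pre-check in Python. It is only a sketch, not the Openbridge validator: it tests a few structural expectations (parseable quoting, the same number of fields in every record) and adds two checks of our own that assume a header row. The function name `check_csv_structure` is hypothetical.

```python
import csv
import io


def check_csv_structure(text):
    """Return a list of problems found; an empty list means none were detected."""
    problems = []
    try:
        # strict=True makes the parser raise csv.Error on malformed quoting.
        rows = list(csv.reader(io.StringIO(text, newline=""), strict=True))
    except csv.Error as exc:
        return [f"parse error: {exc}"]
    if not rows:
        return ["file is empty"]
    header = rows[0]
    # Assumption: the first record is a header row (RFC 4180 makes it optional).
    if any(not name.strip() for name in header):
        problems.append("header contains a blank column name")
    if len(set(header)) != len(header):
        problems.append("header contains duplicate column names")
    # Every record should carry the same number of fields as the header.
    for record_no, row in enumerate(rows[1:], start=2):
        if len(row) != len(header):
            problems.append(
                f"record {record_no}: expected {len(header)} fields, found {len(row)}"
            )
    return problems
```

A check like this will not catch everything a full validator does, but it traps the most common pipeline breakers before the file leaves your machine.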

Imagine pouring a gallon of maple syrup into the gas tank of your car. That is what harmful CSV files do to data pipelines. If an error can get trapped earlier in the process, it improves operations for all systems.

Invalid CSV files create challenges for those building data pipelines. Pipelines value consistency, predictability, and testability as they ensure uninterrupted operation from source to a target destination.
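Step 2 also mentions creating a schema for reference purposes. As an illustration of what that could look like, the sketch below infers a simple column-to-type mapping from CSV text. The type names, the `YYYY-MM-DD` date format, and the function names are our assumptions for this example, and it expects a file that has already passed a structural check.

```python
import csv
import io
from datetime import datetime


def infer_type(values):
    """Pick the first type, from narrowest to widest, that fits every non-empty value."""
    non_empty = [v for v in values if v != ""]
    if not non_empty:
        return "string"
    candidates = (
        ("integer", int),
        ("float", float),
        # Assumption: dates are written as YYYY-MM-DD.
        ("date", lambda v: datetime.strptime(v, "%Y-%m-%d")),
    )
    for type_name, parser in candidates:
        try:
            for value in non_empty:
                parser(value)
            return type_name
        except ValueError:
            continue
    return "string"


def infer_schema(text):
    """Map each header name to an inferred type for the given CSV text."""
    rows = list(csv.reader(io.StringIO(text, newline="")))
    if not rows:
        return {}
    header, records = rows[0], rows[1:]
    # Transpose records into columns; with no records, every column is empty.
    columns = zip(*records) if records else [() for _ in header]
    return {name: infer_type(list(col)) for name, col in zip(header, columns)}
```

Saving the resulting mapping next to the export gives you something to diff against on the next run, which is where the testability pays off.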

To make the validation process dead simple, we have a browser-based tool and API:

CSV File Testing And Schema Generation - Openbridge

If you want to automate the process, you can use the validation API, which also suits larger files.

Once you have validated your CSV, you are ready to import into your data lake or warehouse!

Step 3: Automated imports for your Tableau Prep output to a data lake or warehouse

You have a few choices on how to upload the data. Below are a few reference guides on how to load your Tableau Prep output to a data lake or cloud warehouse location. Once uploaded, your Prep outputs will be available in your target destination in about 5–10 minutes.

- How To Setup A Batch Data Pipeline For CSV Files To Redshift, Redshift Spectrum, Athena or BigQuery

- How To Drag And Drop CSV Files Directly To Google BigQuery, Amazon Redshift, and Athena

- FileZilla: 3 Simple Steps To Load Data to BigQuery, Amazon Redshift, Redshift Spectrum or AWS…

With your Tableau Prep data exported and successfully loaded into your data warehouse, connect Tableau for data visualization work. You can also leverage tools like Power BI, Qlik, Looker, Mode Analytics, AWS QuickSight, and many others for unified data analysis, visualizations, and reporting of your Prep (or any other CSV!) data.

Exploring Automation

If you want to automate the Tableau Prep process itself, you can run a flow end to end by taking advantage of its command-line capabilities. There are some limits relating to the upstream data sources (e.g., BigQuery), but it may be worth a look to see if this is a fit for your use case:

Refresh flow output files from the command line
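As a rough sketch of what scripting that command-line refresh could look like, the Python below wraps the Prep Builder CLI. It assumes the `-t` (flow file) and `-c` (connections/credentials file) options described in Tableau's command-line documentation. The function names and every path shown are hypothetical, so check the documentation for your Prep version and install location before relying on it.

```python
import subprocess


def build_refresh_command(cli_path, flow_path, connections_path=None):
    """Assemble the argument list for one flow run."""
    command = [str(cli_path), "-t", str(flow_path)]
    # Assumption: the credentials file is only needed when the flow
    # connects to sources that require a login.
    if connections_path is not None:
        command += ["-c", str(connections_path)]
    return command


def refresh_flow(cli_path, flow_path, connections_path=None):
    """Run the flow; raises CalledProcessError if the CLI exits non-zero."""
    command = build_refresh_command(cli_path, flow_path, connections_path)
    return subprocess.run(command, check=True, capture_output=True, text=True)


# Example (hypothetical paths):
# refresh_flow(
#     "scripts/tableau-prep-cli.bat",
#     "flows/orders_clean.tfl",
#     "flows/connections.json",
# )
```

Scheduled with cron or Windows Task Scheduler, a wrapper like this could regenerate the CSV output on a timer, leaving the upload step from Step 3 to pick up the fresh file.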

Summary

Tableau Prep exports, combined with the workflow we outlined, ensure that the effort you put into modeling data in your Prep flows keeps data fresh in your lake or warehouse. Getting your Prep data set into a destination makes data for analysis, visualizations, or reporting available to the entire organization.

Once data is resident in those destinations, it is accessible to both Tableau Server and Tableau Desktop users.

To learn more about Tableau, check out their product page here. Prep has been available since the 10.2.1 version of Tableau. Since they offer a free trial, you can explore Prep Builder or Tableau Prep Conductor at no cost.

Want to discuss how to leverage Tableau data pipelines for your organization? Need a platform and team of experts to kickstart your data and analytics efforts? We can help! Getting traction adopting new technologies, especially if it means your team is working in different and unfamiliar ways, can be a roadblock for success. This is especially true in a self-service-only world. If you want to discuss a proof-of-concept, pilot, project, or any other effort, the Openbridge platform and team of data experts are ready to help.

Reach out to us at hello@openbridge.com. Prefer to talk to someone? Set up a call with our team of data experts.

Visit us at www.openbridge.com to learn how we are helping other companies with their data efforts.

How To Automate Data Loading From Tableau Prep Builder To Your Data Lake Or Warehouse was originally published in Openbridge on Medium.

source https://blog.openbridge.com/how-to-automate-data-loading-from-tableau-prep-builder-to-your-data-lake-or-warehouse-eb4235a23588?source=rss----4c5221789b3---4